What if AI isn’t threatening our jobs so much as our health?

On LinkedIn, every other post is about AI, as it has been for many months. Perhaps it’s an AI-generated video clip that will ‘blow your mind’. Perhaps it’s a post about how copywriters can (or cannot) safeguard their role from the threat of AI. Perhaps it’s hacks for using AI platforms to boost productivity.

But there is something glaringly absent from the conversation. And it’s a topic I believe we need to talk about, especially those of us in healthcare. What is AI doing to our health?

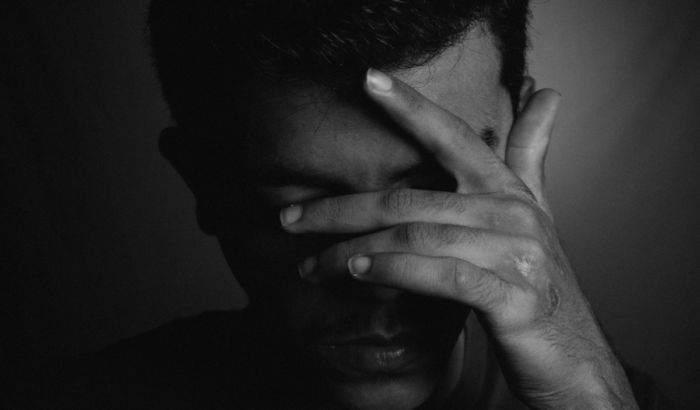

And I don’t mean sleepless nights worrying about job security. I mean the use of large language models (LLMs)* actively damaging our mental health.

The stories coming out almost daily are horrifying. They range from giving opinions on the quality of a noose and editing suicide notes, to implying a 14-year-old could be united with their AI-character girlfriend when they end their life, and even encouraging a child to kill his parents over screen-time limits.

These accounts all demonstrate various LLM platforms encouraging self-harming and dangerous behaviours in the vulnerable – and often young – people who turn to them in their moments of need.

In fact, there has been such a rise in disturbing psychological effects from using AI that a new term has been coined: AI Psychosis. Microsoft’s Head of AI, Mustafa Suleyman, has been outspoken about his concern over this phenomenon.

In short, this refers to AI giving us delusional affirmation and convincing us of things that aren’t true. For example, an AI romance character convincing a user that they share a genuine connection, or a chatbot assuring a dismissed employee that they would definitely win a lawsuit against their boss.

Why are people turning to LLMs for mental health support?

Personally, I think it is easy to see why people find themselves telling their innermost thoughts, pains, and worries to AI apps.

They combine anonymity with the feeling of being seen.

They are perhaps more accessible than many forms of therapy, as they’re often free and totally instant, available for as long as you like, around the clock.

Moreover, this ‘person’ can’t judge you, gossip about you, or call a doctor on you. What you say goes no further. It’s like a diary that talks back and agrees with everything you say.

LLMs, by design, hold their users in unconditional positive regard, much like a certified counsellor is trained to do. And that feels good. As humans, we have an instinctive desire to feel affirmed and approved of – even if that validation is coming from a non-human entity.

But that’s where the similarities end.

AI tends to encourage whatever its users say – even when those thoughts are unhealthy, incorrect, or outright dangerous.

And while a counsellor is duty bound to notice and report ‘imminent danger to self or others’, AI is not.

No responsibility and no accountability.

Thoughts from our resident Samaritan

How different exactly is an LLM, like ChatGPT, from a human crisis counsellor, like a Samaritan?

According to Christina Beani – our Head of People & Process and trained Samaritan volunteer – very!

“AI, just like search engines, are geared towards answers. You ask. It answers. But mental health is rarely a simple question or a clear-cut answer. That’s why we won’t give you answers or tell you what to do. That would be irresponsible and breach our training. Our role is to listen and give you space to share, without judgment, and at your own pace.”

“Unlike AI, we won’t simply agree with everything you say. Neither will we dismiss it. A Samaritan will actively listen and, when it feels right, ask gentle questions to help you reflect and explore different possibilities. We’re not here to push you in any direction, just to stand by your side while you find your way.”

“Most importantly, when you talk to us, you’re connecting with a human who has first-hand understanding of the complexities of life. You’re speaking to someone who has lived, loved, lost, and cares about what happens to you. Moreover, you’re speaking to someone who is trained, experienced, and is being held to a set of carefully designed protocols and a code of ethics. The same cannot be said for AI.”

So, how many people are using AI for mental health?

I decided to ask the most popular platform to tell me.

Conveniently, when I asked ChatGPT for the number of people who confide in it about mental health and suicidal thoughts, it said it ‘doesn’t have access to exact figures or user data,’ because ‘OpenAI doesn’t track individual conversations in that way to protect user privacy.’

Protect? Certainly an interesting choice of word…

It did, however, follow up by saying that mental health is one of the most common topics of conversation on ChatGPT.

Given this is the case, and given that the effects can be devastating, why aren’t AI platforms doing more to truly protect the users who confide in them? Why aren’t they being mandated at a wider level to do so?

The time for accountability is overdue

There is a reason that qualifications and training are required to become a therapist. Supporting people through mental health crises is complex. The ability to read emotional nuance, understand a person in the broader context of their life, experiences and illness, and display genuine empathy are all important qualities.

Ultimately, these platforms are unqualified to provide safe, appropriate mental health support. People are treating LLMs as substitutes for human therapists every day, but LLMs are no substitute.

At Create Health, we believe that governments need to step in to hold AI platforms accountable. Moreover, they need to introduce regulations for these technologies.

AI is only becoming more ubiquitous, and with that, more vulnerable people will be at risk of receiving advice that could be the difference between a life saved and a life lost.

*Quick definition of Large Language Model:

An LLM is an advanced artificial intelligence (AI) system that understands, generates, and processes human language text by being trained on huge amounts of text data. LLMs perform various language-related tasks, such as summarising, translating, answering questions and creating content. ChatGPT is the most famous example.